Making Java app behavior consistent in different environments

The behavior of a process usually depends partly on the environment in which the process is executed. Namely, the default time zone, line separator, locale, and charset are picked by a JDK[1] from the environment in which it is being used, which is usually an operating system's shell (either a command-line interface (CLI) or a graphical user interface (GUI)). If we are developing a CLI utility that is used primarily in conjunction with other CLI utilities, e.g., as part of a Bash pipeline, it may be important for such an application to behave in accordance with the environment to improve interoperability with other utilities. However, if we are developing an application that is supposed to run on its own, we may want to make it behave the same way in different environments. The Java SE API allows us to specify all of the aforementioned explicitly, thus overriding the default values defined by the environment, for example:

- LocalDateTime.now() vs. LocalDateTime.now(ZoneId zone); see also "Pitfalls with JDBC PreparedStatement.setTimestamp/ResultSet.getTimestamp"

- String.lines() vs. Scanner.useDelimiter(Pattern pattern).tokens()

- String.format(String format, Object... args) vs. String.format(Locale l, String format, Object... args)

- PrintStream(OutputStream out, boolean autoFlush) vs. PrintStream(OutputStream out, boolean autoFlush, Charset charset)
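A minimal sketch that gathers the explicit variants in one place. The class and method names (ExplicitEnvironmentValues, nowInUtc, formatAmount, utf8PrintStream) are hypothetical, and UTC, Locale.ROOT, and UTF-8 are merely example choices. Each method produces a result that does not depend on the default time zone, locale, or charset of the environment:

```java
import java.io.OutputStream;
import java.io.PrintStream;
import java.nio.charset.StandardCharsets;
import java.time.LocalDateTime;
import java.time.ZoneOffset;
import java.util.Locale;

final class ExplicitEnvironmentValues {
    // Time zone passed explicitly instead of relying on TimeZone.getDefault().
    static LocalDateTime nowInUtc() {
        return LocalDateTime.now(ZoneOffset.UTC);
    }

    // Locale passed explicitly instead of relying on Locale.getDefault(Locale.Category.FORMAT).
    static String formatAmount(double amount) {
        return String.format(Locale.ROOT, "%.2f", amount);
    }

    // Charset passed explicitly instead of whatever charset the PrintStream would otherwise pick.
    static PrintStream utf8PrintStream(OutputStream out) {
        return new PrintStream(out, true, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        PrintStream out = utf8PrintStream(System.out);
        out.println("now (UTC) = " + nowInUtc());
        out.println("amount    = " + formatAmount(1234.5));
    }
}
```

Changing the default locale, for example, does not affect formatAmount, because the locale is part of the call itself.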

However, in any complex project programmers often forget to explicitly specify the environment-specific values. It is also not very convenient to always have to specify them explicitly, and doing so may not even be possible when using 3rd-party APIs. So it is worth specifying environment-independent application-wide defaults, at least as a safeguard mechanism.


Setting defaults

Time zone

The default java.util.TimeZone is accessible via the methods TimeZone.getDefault()/TimeZone.setDefault(TimeZone zone), and can be set as shown below:

TimeZone.setDefault(TimeZone.getTimeZone(ZoneId.from(ZoneOffset.UTC)));
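To see that java.time picks up this default, one can check ZoneId.systemDefault(), which is documented to use TimeZone.getDefault(). The sketch below (the class name DefaultTimeZoneDemo is hypothetical) restores the original default afterwards so that the change does not leak into other code:

```java
import java.time.Instant;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.util.TimeZone;

final class DefaultTimeZoneDemo {
    // Same call as in the text above.
    static void useUtcByDefault() {
        TimeZone.setDefault(TimeZone.getTimeZone(ZoneId.from(ZoneOffset.UTC)));
    }

    public static void main(String[] args) {
        TimeZone original = TimeZone.getDefault();
        try {
            useUtcByDefault();
            ZoneId zone = ZoneId.systemDefault(); // derived from TimeZone.getDefault()
            System.out.println("default zone: " + zone
                    + ", current offset: " + zone.getRules().getOffset(Instant.now()));
        } finally {
            TimeZone.setDefault(original); // undo the global change
        }
    }
}
```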

Line separator

Any string/text is simply a sequence of abstract characters. A line is a concept that is not intrinsic to a string/text; rather, it is added on top of it as a basic way to mark up the text, separating different pieces from each other to facilitate human perception. On paper or on a screen, we display different lines by spatially separating them. In a logical system, the information about where a line ends is represented by specially designated control (a.k.a. non-printing or nongraphic) characters, or sequences of them, called line separators, which are injected into the text.

The Java SE API provides two ways of accessing the default line separator: either directly via the standard line.separator Java system property or by using the method java.lang.System.lineSeparator().

Note that the documentation of the method System.getProperties() states:

"Changing a standard system property may have unpredictable results unless otherwise specified. Property values may be cached during initialization or on first use. Setting a standard property after initialization … may not have the desired effect."

So the only reliable way of setting the default line separator is by specifying the value of the line.separator Java system property when starting a JVM process.
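A quick way to see why setting the property at runtime is unreliable: on the OpenJDK builds I am aware of, System.lineSeparator() returns a value cached during JVM initialization, so System.setProperty changes what the property reads but not what lineSeparator() returns. The specification only promises "unpredictable results", so treat this as observed implementation behavior. The class LineSeparatorAtRuntime and its helper are hypothetical:

```java
final class LineSeparatorAtRuntime {
    // Returns {lineSeparator() before, lineSeparator() after, property after}, then restores the property.
    static String[] trySetAtRuntime(String newValue) {
        String before = System.lineSeparator();
        String savedProperty = System.getProperty("line.separator");
        try {
            System.setProperty("line.separator", newValue);
            return new String[] {before, System.lineSeparator(), System.getProperty("line.separator")};
        } finally {
            System.setProperty("line.separator", savedProperty);
        }
    }

    public static void main(String[] args) {
        String[] r = trySetAtRuntime("|");
        System.out.println("lineSeparator() unchanged: " + r[0].equals(r[1]));
        System.out.println("property now reads:        " + r[2]);
    }
}
```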

Once this is done, methods like java.io.PrintStream.println() will use the specified value. How to specify a Java system property when starting a JVM process depends on the shell; here is how this can be done when using the java/javaw launcher in

- Bash

  $ java -Dline.separator=$'\n'

  See Bash ANSI-C Quoting for the details about the $'\n' syntax. By the way, the JLS specifies a similar way of escaping nongraphic characters.

- PowerShell

  > java -D'line.separator'="`n"

  See PowerShell About Special Characters for the details about the "`n" syntax.

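Whichever shell is used, one way to check what the JVM actually received is to print the code points of System.lineSeparator(), since the separator itself is invisible. A sketch; the class ShowLineSeparator and its codePoints helper are hypothetical, and Character.getName is used only for a human-readable label:

```java
import java.util.Locale;
import java.util.stream.Collectors;

final class ShowLineSeparator {
    // Hypothetical helper: renders every char as U+XXXX so that invisible control characters become visible.
    static String codePoints(String s) {
        return s.chars()
                .mapToObj(c -> String.format(Locale.ROOT, "U+%04X", c))
                .collect(Collectors.joining(" "));
    }

    public static void main(String[] args) {
        String separator = System.lineSeparator();
        // Character.getName returns the Unicode name of a code point (null if unassigned).
        String names = separator.chars()
                .mapToObj(Character::getName)
                .collect(Collectors.joining(", ", "{", "}"));
        System.out.println("line separator = " + codePoints(separator) + " " + names);
    }
}
```

Started with -Dline.separator set to a line feed, it should report U+000A; on Windows without the option, the default is the two-character sequence U+000D U+000A.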
Locale

The default java.util.Locale is accessible via the methods Locale.getDefault()/Locale.setDefault(Locale newLocale), and can be set as shown below:

Locale.setDefault(Locale.ENGLISH);
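The effect is easy to observe with String.format(String format, Object... args), which uses the default FORMAT locale; Locale.setDefault(Locale newLocale) also sets the default for each Locale.Category. A sketch with a hypothetical DefaultLocaleDemo class:

```java
import java.util.Locale;

final class DefaultLocaleDemo {
    // Implicitly uses Locale.getDefault(Locale.Category.FORMAT).
    static String formatWithDefaultLocale(double value) {
        return String.format("%.2f", value);
    }

    public static void main(String[] args) {
        Locale original = Locale.getDefault();
        try {
            Locale.setDefault(Locale.GERMANY);
            System.out.println(formatWithDefaultLocale(1234.5)); // prints 1234,50
            Locale.setDefault(Locale.ENGLISH);
            System.out.println(formatWithDefaultLocale(1234.5)); // prints 1234.50
        } finally {
            Locale.setDefault(original); // restore the general default
        }
    }
}
```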

Charset

The default java.nio.charset.Charset can be obtained via the method Charset.defaultCharset(), but Java SE does not provide any way of setting the default charset. As of JEP 400: UTF-8 by Default, the Java SE API uses UTF-8 as the default charset, except for the java.io.Console API, where the charset must match the operating system's shell charset.

Before JEP 400, i.e., before OpenJDK JDK 18, one could set the default charset to UTF-8 via the Java system property file.encoding, but this property is an implementation detail and is not part of Java SE.
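For diagnostics, it may help to print what the JDK picked. A hypothetical sketch that reports Charset.defaultCharset() together with the file.encoding and native.encoding system properties; as noted above, file.encoding is an implementation detail, and native.encoding is only available since JDK 17:

```java
import java.nio.charset.Charset;

final class CharsetDiagnostics {
    static String report() {
        return "default charset=" + Charset.defaultCharset().name()
                + ", file.encoding=" + System.getProperty("file.encoding")
                // "native.encoding" exists since JDK 17; older JDKs return null here.
                + ", native.encoding=" + System.getProperty("native.encoding");
    }

    public static void main(String[] args) {
        System.out.println(report());
    }
}
```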

The most basic functionality that relies on the default charset and is used, directly or indirectly, by virtually all Java applications is the standard System.out and System.err PrintStreams.

We can specify the Charset used by these two PrintStreams as follows:

System.setOut(new PrintStream(System.out, true, StandardCharsets.UTF_8));

System.setErr(new PrintStream(System.err, true, StandardCharsets.UTF_8));

Note that the constructor of the PrintStream class takes an OutputStream, which is charset-agnostic because it does not operate on characters. The approach shown above works despite System.out/System.err being PrintStreams and, thus, having their own charsets, because the constructor of the PrintStream class treats them merely as OutputStreams, to which the new PrintStream writes already encoded bytes.
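A small in-memory sketch of this pass-through behavior. The inner ISO-8859-1 PrintStream is a hypothetical stand-in for System.out with a non-UTF-8 charset, and the class and method names are also hypothetical. The outer PrintStream encodes characters as UTF-8 and hands the resulting bytes to the inner stream's byte-oriented write methods, which forward them unchanged:

```java
import java.io.ByteArrayOutputStream;
import java.io.PrintStream;
import java.nio.charset.StandardCharsets;

final class WrappedPrintStreamDemo {
    static byte[] printThroughWrapper(String text) {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        // Stand-in for System.out: a PrintStream whose own charset is ISO-8859-1.
        PrintStream inner = new PrintStream(sink, true, StandardCharsets.ISO_8859_1);
        // The wrapper encodes chars with UTF-8 and passes raw bytes to inner,
        // so inner's charset is never used for this text.
        PrintStream outer = new PrintStream(inner, true, StandardCharsets.UTF_8);
        outer.print(text);
        outer.flush();
        return sink.toByteArray();
    }

    public static void main(String[] args) {
        byte[] bytes = printThroughWrapper("\u00e9"); // é
        StringBuilder hex = new StringBuilder();
        for (byte b : bytes) {
            hex.append(String.format("%02X ", b & 0xFF));
        }
        System.out.println(hex.toString().trim()); // prints C3 A9, the UTF-8 encoding of é
    }
}
```

Printing the same character directly to the inner stream would instead produce the single ISO-8859-1 byte E9.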

Note also that the fields System.out/System.err are declared as static final, and yet the methods System.setOut(PrintStream out)/System.setErr(PrintStream err) somehow write to these fields after they are initialized. Doesn't this violate the "may only be assigned to once" semantics of final variables specified in JLS 4.12.4. final Variables?

It does, but this exception is allowed by JLS 17.5.4. Write-Protected Fields.
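The practical consequence is that code which reads System.out at call time sees the replacement stream. A hypothetical captureStdout helper shows this by redirecting System.out to an in-memory UTF-8 PrintStream and restoring the original afterwards:

```java
import java.io.ByteArrayOutputStream;
import java.io.PrintStream;
import java.nio.charset.StandardCharsets;

final class SetOutDemo {
    // Temporarily replaces the "final" System.out, runs the action, and restores the original stream.
    static String captureStdout(Runnable action) {
        PrintStream original = System.out;
        ByteArrayOutputStream buffer = new ByteArrayOutputStream();
        System.setOut(new PrintStream(buffer, true, StandardCharsets.UTF_8));
        try {
            action.run(); // any System.out use inside the action now refers to the new stream
            System.out.flush();
        } finally {
            System.setOut(original);
        }
        return new String(buffer.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String captured = captureStdout(() -> System.out.print("written while redirected"));
        System.out.println("captured: " + captured);
    }
}
```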

Example

ConsistentAppExample.java is a tiny Java application that demonstrates the aforementioned techniques.

We can start it in Bash running in macOS or Ubuntu using the source-file mode (see also JEP 330: Launch Single-File Source-Code Programs):

$ java -Dline.separator=$'\n' -Dfile.encoding=UTF-8 ConsistentAppExample.java

charset=UTF-8, console charset=UTF-8, native charset=UTF-8, locale=en, time zone=UTC, line separator={LINE FEED (LF)}

Charset smoke test: latin:english___cyrillic:русский___hangul:한국어___math:μ∞θℤ

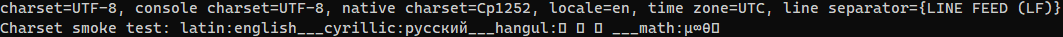

or in PowerShell running in Windows from Windows Terminal[2]:

> java -D'line.separator'="`n" -D'file.encoding'=UTF-8 ConsistentAppExample.java

charset=UTF-8, console charset=UTF-8, native charset=Cp1252, locale=en, time zone=UTC, line separator={LINE FEED (LF)}

Charset smoke test: latin:english___cyrillic:русский___hangul:한국어___math:μ∞θℤ

Be aware that starting a Java application using the source-file mode introduces an additional activity where the default platform charset plays a role: the source file "bytes are read with the default platform character encoding that is in effect." [3]

This can be avoided if we first compile the source files, explicitly specifying the source file charset via the documented javac -encoding option, and then start the application from the resulting class file, which has a "hardware- and operating system-independent binary format".
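What goes wrong when source bytes are read with the wrong charset can be simulated by encoding text with one charset and decoding the bytes with another. In this hypothetical sketch, ISO-8859-1 plays the role of a mismatched default charset (chosen because every Java SE implementation is required to support it). The Cyrillic literal is written with Unicode escapes, which keeps this very file immune to the problem it demonstrates:

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

final class SourceCharsetMismatch {
    // Encodes text with one charset and decodes the bytes with another.
    static String reread(String text, Charset writtenWith, Charset readWith) {
        return new String(text.getBytes(writtenWith), readWith);
    }

    public static void main(String[] args) {
        String russian = "\u0440\u0443\u0441\u0441\u043a\u0438\u0439"; // "русский"
        System.out.println("read as UTF-8:      "
                + reread(russian, StandardCharsets.UTF_8, StandardCharsets.UTF_8));
        System.out.println("read as ISO-8859-1: "
                + reread(russian, StandardCharsets.UTF_8, StandardCharsets.ISO_8859_1));
    }
}
```

Each Cyrillic letter takes two bytes in UTF-8, so the mismatched read turns 7 characters into 14 unrelated ones.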

Using iconv to convert between charsets

As a bonus topic, which is to some extent related to the main topic of the article, I would like to mention the iconv POSIX CLI utility for converting between charsets.

We have two CLI Java programs represented as "shebang" files:

- inShellOutUtf16, which reads character data from the stdin using the charset specified by the shell and writes the data to the stdout using the UTF-16 charset;

- inUtf8OutShell, which reads character data from the stdin using the UTF-8 charset and writes the data to the stdout using the charset specified by the shell.

If we try feeding the stdout of the first to the stdin of the second in Bash running in macOS or Ubuntu, we see

$ echo 'Hello 🌎!' | ./inShellOutUtf16 | ./inUtf8OutShell

��Hello �<�!

Apparently, the pipeline is not working correctly.
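The garbled output can be reproduced in-process. This sketch assumes that inShellOutUtf16 writes a byte-order mark, as Java's UTF-16 encoder does, and that the terminal renders control characters such as NUL as nothing; the class and method names are hypothetical. Decoding the UTF-16 bytes as UTF-8 turns the BOM bytes FE FF into two U+FFFD replacement characters, leaves a NUL byte next to each ASCII letter, and splits the surrogate-pair bytes D8 3C DF 0E into U+FFFD, "<", U+FFFD, and a control character:

```java
import java.nio.charset.StandardCharsets;

final class BrokenPipelineExplained {
    // What the second program sees: UTF-16 bytes (with a BOM) decoded as if they were UTF-8.
    static String decodeUtf16BytesAsUtf8(String text) {
        byte[] utf16 = text.getBytes(StandardCharsets.UTF_16); // big-endian, prefixed with the BOM FE FF
        return new String(utf16, StandardCharsets.UTF_8);       // malformed sequences become U+FFFD
    }

    // Drops control characters (NUL, U+000E, ...) that a terminal typically renders as nothing.
    static String visiblePart(String s) {
        StringBuilder sb = new StringBuilder();
        s.codePoints().filter(cp -> !Character.isISOControl(cp)).forEach(sb::appendCodePoint);
        return sb.toString();
    }

    public static void main(String[] args) {
        String input = "Hello " + new String(Character.toChars(0x1F30E)) + "!"; // U+1F30E is the globe emoji
        System.out.println(visiblePart(decodeUtf16BytesAsUtf8(input)));
    }
}
```

With the control characters removed, the result is "\uFFFD\uFFFDHello \uFFFD<\uFFFD!", which matches the ��Hello �<�! shown above.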

We may fix it using iconv:

$ echo 'Hello 🌎!' | ./inShellOutUtf16 | iconv -f UTF-16 -t UTF-8 | ./inUtf8OutShell

Hello 🌎!

You may notice that these programs use java.io.InputStreamReader and java.io.OutputStreamWriter with explicit charsets instead of setting the charset on the System.out PrintStream as was shown above. This is because the Java SE API provides a straightforward tool for transferring character data from a java.io.Reader to a java.io.Writer: Reader.transferTo(Writer out).

It is important to note that we cannot use the method java.io.InputStream.transferTo(OutputStream out), because this way we would be transferring binary data from the stdin to the stdout instead of transferring character data, which would break the semantics of the programs.
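A minimal sketch of the difference, using in-memory streams instead of the stdin/stdout; the names TranscodeDemo, transcode, and copyBytes are hypothetical and not taken from the programs above. transcode decodes and re-encodes characters via Reader.transferTo(Writer out) (JDK 10+), while copyBytes uses InputStream.transferTo(OutputStream out) (JDK 9+) and therefore leaves the bytes untouched:

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.Reader;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

final class TranscodeDemo {
    // Character-level copy: decode with `from`, re-encode with `to`.
    static byte[] transcode(byte[] input, Charset from, Charset to) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (Reader reader = new InputStreamReader(new ByteArrayInputStream(input), from);
             Writer writer = new OutputStreamWriter(out, to)) {
            reader.transferTo(writer);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray(); // closing the writer above flushed the encoder
    }

    // Byte-level copy: no charset is involved, so the bytes pass through unchanged.
    static byte[] copyBytes(byte[] input) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try {
            InputStream in = new ByteArrayInputStream(input);
            in.transferTo(out);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] utf8 = ("Hello " + new String(Character.toChars(0x1F30E)) + "!").getBytes(StandardCharsets.UTF_8);
        byte[] utf16 = transcode(utf8, StandardCharsets.UTF_8, StandardCharsets.UTF_16);
        System.out.println("UTF-8 bytes: " + utf8.length + ", UTF-16 bytes: " + utf16.length);
        byte[] back = transcode(utf16, StandardCharsets.UTF_16, StandardCharsets.UTF_8);
        System.out.println("round trip ok: " + Arrays.equals(back, utf8));
    }
}
```

Calling transcode(utf16Bytes, UTF_16, UTF_8) does in-process what iconv -f UTF-16 -t UTF-8 does in the pipeline above.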

[1]

Java Platform

A Java Development Kit (JDK) is the common name for an implementation of the Java Platform, Standard Edition (Java SE) Specification. For example, here is a link to the Java SE 17 Specification, whose formal name is Java Specification Request (JSR) 392. Its key parts are

- Java Language Specification (JLS),

- Java Virtual Machine Specification (JVMS),

- Java SE API Specification;

there are other parts, e.g.,

Unfortunately, with each Java SE release OpenJDK publishes mostly the changed specifications rather than all of them. See the specifications published by Oracle for a full list of Java SE parts. Previously, the "SE" part was used to differentiate between the "standard" Java Platform, the Java Platform, Micro Edition (Java ME), and the Java Platform, Enterprise Edition (Java EE). Java ME is dead, and Java EE evolved into the Jakarta Enterprise Edition Platform (Jakarta EE) after Java EE 8; thus, the "SE" qualifier is an atavism.

JRE

A subset of a JDK that is sufficient to run a Java application but is not sufficient to develop one is commonly called a Java Runtime Environment (JRE). The key part of any JDK or JRE is a Java Virtual Machine (JVM); it is responsible for the hardware- and operating system–independence of any programming language compiled into JVM instructions called bytecodes (such languages are often called JVM languages). A JVM can be thought of as an emulator of a computing machine that understands the instruction set specified by JVMS Chapter 6. The Java Virtual Machine Instruction Set. The Java class file format is to a JVM what the Executable and Linking Format (ELF) / Portable Executable (PE) format is to a machine controlled by the Linux/Windows operating system, respectively.

OpenJDK

OpenJDK is a community whose main goal is developing an open-source implementation of the Java SE Specification. OpenJDK JDK is a proper name of the JDK developed by the OpenJDK community ("OpenJDK" is an adjective here according to JDK-8205956 Fix usage of “OpenJDK” in build and test instructions), but it is ridiculous and is usually shortened to just OpenJDK where it does not cause ambiguity. We may see the usage of the full name, for example, on the page How to download and install prebuilt OpenJDK packages:

"Oracle's OpenJDK JDK binaries for Windows, macOS, and Linux are available on release-specific pages of jdk.java.net…"

I find this naming confusing. So, the OpenJDK JDK is an implementation of the Java SE Specification. Because it is open-source, there are many other implementations based on it. Each implementation may have its own additional features not specified by the Java SE Specification. Both standard and nonstandard features included in each new release of the OpenJDK JDK are listed on the corresponding release page. They are described in JDK Enhancement Proposals (JEPs); here is a link to the OpenJDK JDK 17 release page specifying all the JEPs included in this release.

One of the commercial JDKs based on OpenJDK JDK is Oracle JDK. We may see that the Java API they provide includes both the Java SE API and Oracle JDK–specific API. The Oracle JDK documentation and dev.java are great places to find the information you need when learning Java or developing with it.

[2]

It would have been nice if PowerShell just worked with UTF-8, but it does not. You need to use the following incantation, which I found here, to make it input and output UTF-8 correctly:

$OutputEncoding = [console]::InputEncoding = [console]::OutputEncoding = New-Object System.Text.UTF8Encoding

I use PowerShell 7.2; other versions may behave differently. Note also that I see the correct results shown above only when I run PowerShell in Windows Terminal. If I run PowerShell on its own, it displays

regardless of whether I use the Lucida Console font family or Cascadia Code.

[3]

This is why -Dfile.encoding=UTF-8 may be omitted in Bash running in macOS or Ubuntu:

$ java -Dline.separator=$'\n' ConsistentAppExample.java

charset=UTF-8, console charset=UTF-8, native charset=UTF-8, locale=en, time zone=UTC, line separator={LINE FEED (LF)}

Charset smoke test: latin:english___cyrillic:русский___hangul:한국어___math:μ∞θℤ

However, when -D'file.encoding'=UTF-8 is omitted in PowerShell running in Windows, I get

> java -D'line.separator'="`n" ConsistentAppExample.java

charset=windows-1252, console charset=UTF-8, native charset=Cp1252, locale=en, time zone=UTC, line separator={LINE FEED (LF)}

Charset smoke test: latin:english___cyrillic:руÑ�Ñ�кий___hangul:í•œêµì–´___math:μ∞θℤ

despite the application putting UTF-8 bytes in the stdout due to the code

System.setOut(new PrintStream(System.out, true, StandardCharsets.UTF_8)). As we can see, the JDK detects that the environment charset is Cp1252, which is weird, as Microsoft claims: "In PowerShell 6+, the default encoding is UTF-8 without BOM on all platforms."

If we compile the Java code and then run it, the application behaves as expected without -D'file.encoding'=UTF-8 in PowerShell running in Windows:

> javac -encoding UTF-8 stincmale\sandbox\examples\makeappbehaviorconsistent\ConsistentAppExample.java

> java -D'line.separator'="`n" stincmale.sandbox.examples.makeappbehaviorconsistent.ConsistentAppExample

charset=windows-1252, console charset=UTF-8, native charset=Cp1252, locale=en, time zone=UTC, line separator={LINE FEED (LF)}

Charset smoke test: latin:english___cyrillic:русский___hangul:한국어___math:μ∞θℤ